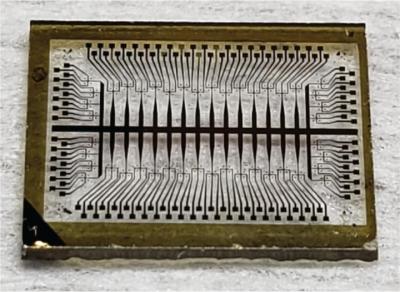

An array of artificial neurons on a device. Image credit: Sangheon Oh/Nature Nanotechnology

Training neural networks to perform tasks, such as recognizing images or navigating self-driving cars, could one day require less computing power and hardware thanks to a new artificial neuron device developed by researchers at the University of California San Diego. The device can run neural network computations using 100 to 1000 times less energy and area than existing CMOS-based hardware.

A neural network is a series of connected layers of artificial neurons, where the output of one layer provides the input to the next. That input is generated by applying a mathematical calculation called a non-linear activation function, which is a critical part of running a neural network. But applying this function requires a lot of computing power and circuitry because it involves transferring data back and forth between two separate units: the memory and an external processor.
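To make the role of the activation function concrete, here is a minimal software sketch of a layered network in Python. It is an illustration only, not the researchers' hardware or method. The choice of ReLU as the activation, and the names `relu`, `dense_layer` and `forward`, are assumptions made for this example, and the weights are arbitrary.

```python
def relu(x):
    # Assumed activation for illustration: passes positive values, zeroes out the rest.
    return max(0.0, x)


def dense_layer(inputs, weights, biases, activation):
    # Each neuron takes a weighted sum of the inputs plus a bias,
    # then applies the non-linear activation function to that sum.
    outputs = []
    for row, bias in zip(weights, biases):
        pre_activation = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(activation(pre_activation))
    return outputs


def forward(inputs, layers, activation=relu):
    # The activated output of one layer becomes the input to the next.
    for weights, biases in layers:
        inputs = dense_layer(inputs, weights, biases, activation)
    return inputs


# Hypothetical two-layer network: 2 inputs -> 2 neurons -> 1 neuron.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -2.0]),
    ([[2.0, 1.0]], [0.5]),
]
result = forward([3.0, 1.0], layers)
print(result)
```

In conventional hardware, each call to the activation step in a loop like this implies moving intermediate values between memory and a processor, which is the overhead the paragraph above describes.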

Now, UC San Diego researchers have developed a nanometer-sized device that can efficiently carry out the activation function.